Efficient, Reliable, and Secure Data Storage using Pug and AES Crypt

June 14, 2025

Among the many things I do, I manage several Linux systems (including a NAS) that need to be reliably backed up. Like many people, I used “tar” to create compressed archives of the data. The next step was to encrypt each archive locally using AES Crypt. Once an archive file was encrypted, I would store it in cloud storage or on physical media kept offsite. Encryption was (and still is) an important part of the process. It is vital that I hold the keys, because I cannot be sure who can access files once they are out of my direct control. (Anyone trusting a cloud provider to keep files secure should rethink that security strategy.)

While “tar” served well for most things, it was a bad solution for the many thousands of files I managed. And while tar does allow for incremental backups, they become painful to deal with as the number of increments grows. Retrieving files across a set of incremental backups was torturous.

Archiving the entire set of files repeatedly was inefficient and expensive, and tar’s incremental feature did not really address my needs. I wanted a better solution with the following capabilities:

- Archive each distinct file only once, even if identical copies are stored locally many times

- Allow multiple revisions of any given file to be archived

- Expire old revisions after some defined time

- Retain deleted files for a period of time to allow for recovery

- Don’t hastily back up new or changing files, since capturing every intermediate midday edit is not important

- Compress files before archival

- Securely encrypt files before archival

- Enable retrieval of any specific file or revision

- Enable the recovery of all archived files

- Allow revision counts and retention rules to vary depending on the classification of files (e.g., directory or set of directories)
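Several of these requirements fit naturally into a content-addressed index, where each stored object is keyed by a hash of its contents. The Python sketch below is a minimal, hypothetical illustration of that idea (deduplication by SHA-256 digest, a settle delay before archiving, multiple revisions per path, retention of deleted files, and per-classification expiry rules). It is not Pug's actual design or code: `Policy`, `ArchiveIndex`, and their fields are invented names, and compression, encryption, and uploading are left out.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Policy:
    """Hypothetical per-classification rules (e.g., one Policy per directory tree)."""
    max_revisions: int     # newest revisions kept per path (must be >= 1)
    retention_secs: float  # how long superseded or deleted revisions survive
    settle_secs: float     # a file must be unchanged this long before it is archived


@dataclass
class Revision:
    digest: str                            # SHA-256 of the file contents
    archived_at: float
    superseded_at: Optional[float] = None  # set when a newer revision or a deletion replaces it


class ArchiveIndex:
    def __init__(self, policies: Dict[str, Policy]):
        self.policies = policies
        self.blobs: Dict[str, bytes] = {}  # digest -> contents (stands in for encrypted cloud objects)
        self.history: Dict[Tuple[str, str], List[Revision]] = {}  # oldest revision first

    def observe(self, cls: str, path: str, data: bytes, mtime: float, now: float) -> bool:
        """Record a new revision if the file has settled and changed; return True if recorded."""
        if now - mtime < self.policies[cls].settle_secs:
            return False  # still being edited; pick it up on a later pass
        digest = hashlib.sha256(data).hexdigest()
        revs = self.history.setdefault((cls, path), [])
        current = revs[-1] if revs and revs[-1].superseded_at is None else None
        if current is not None and current.digest == digest:
            return False  # unchanged since the last archive
        if current is not None:
            current.superseded_at = now
        self.blobs.setdefault(digest, data)  # identical contents are stored only once
        revs.append(Revision(digest, now))
        return True

    def mark_deleted(self, cls: str, path: str, now: float) -> None:
        """Keep the last revision of a deleted file, but start its retention clock."""
        revs = self.history.get((cls, path), [])
        if revs and revs[-1].superseded_at is None:
            revs[-1].superseded_at = now

    def retrieve(self, cls: str, path: str, revision: int = -1) -> bytes:
        """Return any archived revision of a path (default: the newest)."""
        return self.blobs[self.history[(cls, path)][revision].digest]

    def expire(self, now: float) -> None:
        """Apply retention rules, then drop contents that no revision references any more."""
        for key, revs in list(self.history.items()):
            policy = self.policies[key[0]]
            kept = [r for r in revs
                    if r.superseded_at is None or now - r.superseded_at < policy.retention_secs]
            kept = kept[-policy.max_revisions:]  # a live revision is always last, so the cap keeps it
            if kept:
                self.history[key] = kept
            else:
                del self.history[key]
        live = {r.digest for revs in self.history.values() for r in revs}
        for digest in [d for d in self.blobs if d not in live]:
            del self.blobs[digest]
```

Because objects are keyed by content, two copies of the same file, or a file that reverts to an earlier state, share one archived object, and that object is only discarded once no retained revision of any path still refers to it.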

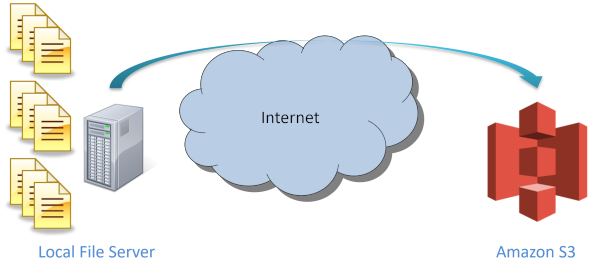

What I came up with is Pug, software designed for use by talented IT staff. Pug is published as free software, with its source code available. It can run directly on a Linux machine or inside a container. I run Pug inside a container on my own NAS, which lets me keep all important data files securely backed up right from the server hosting the data.

Pug checks off every one of the requirements I had, including the ability to securely encrypt files before uploading them to cloud storage. Using AES Crypt for security was an important requirement, and I also made it possible to perform key rotation, which is another important aspect of any secure solution.
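Much of key rotation is bookkeeping: each archived object records which key encrypted it, new objects use the newest key, and an old key can be retired only once nothing depends on it. The Python sketch below assumes that model; it is not a description of how Pug implements rotation. `KeyRing`, `ArchivedObject`, `rotate`, and the `reencrypt` callback are hypothetical names, and the actual AES Crypt decryption and re-encryption are left to the callback.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ArchivedObject:
    name: str
    key_id: str  # which key encrypted this object


class KeyRing:
    """Hypothetical key ring: the newest key encrypts; older keys remain for decryption."""

    def __init__(self) -> None:
        self._keys: Dict[str, str] = {}  # key_id -> secret (e.g., a password or key file path)
        self._order: List[str] = []      # key_ids, oldest first

    def add_key(self, key_id: str, secret: str) -> None:
        if key_id in self._keys:
            raise ValueError(f"duplicate key id {key_id!r}")
        self._keys[key_id] = secret
        self._order.append(key_id)

    @property
    def current_id(self) -> str:
        return self._order[-1]

    def secret_for(self, key_id: str) -> str:
        return self._keys[key_id]

    def retire(self, key_id: str, objects: List[ArchivedObject]) -> None:
        """Remove an old key, refusing if it is current or still protects any object."""
        if key_id == self.current_id:
            raise ValueError("cannot retire the current key")
        if any(obj.key_id == key_id for obj in objects):
            raise ValueError(f"key {key_id!r} is still in use")
        del self._keys[key_id]
        self._order.remove(key_id)


# Hypothetical hook: (object, old secret, new secret) -> None, doing the real crypto work.
ReEncrypt = Callable[[ArchivedObject, str, str], None]


def rotate(objects: List[ArchivedObject], ring: KeyRing, reencrypt: ReEncrypt) -> int:
    """Re-encrypt every object not under the current key; return how many were rewritten."""
    new_id = ring.current_id
    count = 0
    for obj in objects:
        if obj.key_id != new_id:
            reencrypt(obj, ring.secret_for(obj.key_id), ring.secret_for(new_id))
            obj.key_id = new_id  # updated only after the callback returns without error
            count += 1
    return count
```

Recording the key ID with each object, rather than trying every key in turn, makes it cheap to find what still depends on an old key before that key is retired.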

Pug was written over a decade ago. So why am I writing a blog post about it now? The reason is that it struck me that, for over a decade, my systems have been securely backed up with virtually no intervention on my part. I almost forget it’s running, except when I periodically introduce a new encryption key for the software to use or replace the NAS server with a new one. Pug has been rock solid for years. While it is a bit too complex for most hobbyists (or even some inexperienced IT staff) to use, it is an excellent solution for anyone who wants a good backup solution and has requirements similar to the ones I had when I set out to write Pug.

In full disclosure, though, I still use “tar” (piped to AES Crypt for security) when backing up system configuration and similar files on Linux machines. Such backups are relatively small, and I use the right tool for the task. It’s just that “tar” was a terrible solution for the massive number of user files I had to keep securely backed up.
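For those smaller tar-based backups, the pipeline can also be driven from a script. The Python sketch below runs `tar` into `aescrypt` without a shell. It assumes the AES Crypt command-line tool is installed as `aescrypt` and accepts `-e` (encrypt), `-k` (key file), `-o` (output file), and `-` to read standard input; check `aescrypt -h` for your version. `build_commands` and `run_pipeline` are hypothetical helper names.

```python
import subprocess
from typing import List, Tuple


def build_commands(source_dir: str, output_path: str, key_file: str) -> Tuple[List[str], List[str]]:
    """Return argv lists for `tar -czf - DIR | aescrypt -e -k KEY -o OUT -` (aescrypt flags assumed)."""
    tar_cmd = ["tar", "-czf", "-", source_dir]  # gzip-compressed archive written to stdout
    aes_cmd = ["aescrypt", "-e", "-k", key_file, "-o", output_path, "-"]  # "-": read from stdin
    return tar_cmd, aes_cmd


def run_pipeline(producer: List[str], consumer: List[str]) -> None:
    """Run `producer | consumer` without a shell; raise if either command fails."""
    prod = subprocess.Popen(producer, stdout=subprocess.PIPE)
    try:
        cons = subprocess.Popen(consumer, stdin=prod.stdout)
    except OSError:
        prod.kill()  # e.g., the consumer binary is not installed
        prod.wait()
        raise
    finally:
        prod.stdout.close()  # only the consumer holds the read end of the pipe now
    cons_rc = cons.wait()
    prod_rc = prod.wait()
    if prod_rc != 0 or cons_rc != 0:
        raise RuntimeError(f"pipeline failed: producer={prod_rc}, consumer={cons_rc}")
```

A call such as `run_pipeline(*build_commands("/etc", "etc-backup.tgz.aes", "/root/backup.key"))` (hypothetical paths) would then produce an encrypted, compressed archive. Passing a key file with `-k` rather than a password on the command line keeps the secret out of the process list, and checking both exit codes matters because a shell pipeline normally reports only the status of its last command.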
