Backing Up Files to Amazon S3 Using Pug

May 26, 2013

Like many other people, I need to do routine backups of my data. And like many others, I have to concern myself with not just my own files, but everyone’s files on the network. Backing up data becomes a mind-numbingly boring chore after a while, made worse by the fact that I really have better things to do than deal with backups so frequently.

Years ago, I used to back up data to magnetic tape. Yeah, that was a long time ago, but it helps put things into perspective: I’ve had to deal with this problem for far too long. Over time, I graduated from magnetic tape to other external storage devices, most recently USB drives.

I tried a variety of techniques for backing up data, from full backups to incremental backups. Incremental backups are so nice to perform, since they require far less time. However, if you have ever had to restore from an incremental backup, you know how painful that can be. You have to restore the main backup and then each individual incremental backup in turn. And it’s only made worse when all you need to restore is one user’s files or a single file.

There is also the hassle of dealing with physical backup devices. You cannot simply store those on site, because they are subject to damage by fire, water sprinklers, etc. So periodically, I would take drives to the bank for storage in a safe deposit box. That just added to the pain of doing backups.

What I wanted was a backup solution that met these requirements:

- I wanted a fully automated solution where I didn't have to be bothered with physical storage devices, storing physical drives in a bank vault, etc.

- Once put in place, I wanted a solution that "just worked" and was fault tolerant

- I wanted a solution that was secure

- I wanted a solution that would work incrementally, storing only new or changed files each day (or even each minute)

- I wanted a means of storing multiple versions of files, any one of which I could retrieve fairly easily

- I wanted a solution that would not waste space by storing the same file multiple times (which is a problem when multiple users each have a copy of the same file)

- I wanted a solution that would preserve locally deleted files for whatever period of time I specify (i.e., if a user deletes a file, I want to be able to recover it)

- I wanted a solution that would allow me to recover any version of a file for some period of time

- I wanted a solution that I could rely on even in the face of a natural disaster

Fortunately, cloud storage options came along, which had the potential for meeting many of the above requirements. Perhaps most popular among those cloud storage options is Amazon S3. Equally important, Amazon S3’s pricing is fairly reasonable. If one has 100GB of data to store, for example, the cost of storage is US$9.50 per month today. Amazon also has a very good track record of reducing the price of cloud storage as they find ways to reduce those costs, making it even more attractive.

The challenge I had was finding the right software.

I found several packages that would synchronize an entire directory with S3, but that did not meet many of my requirements. For example, if a user accidentally changed a file and then the sync took place, the original file was lost forever. Likewise, I would not want an accidentally deleted file to be removed from cloud storage.

I also found tools that would create full backups and store those in the cloud. However, that approach is extremely costly in terms of storage and bandwidth. I also found some that worked incrementally, wherein one large backup is made and then only changes are stored. The problem with that is that restoring files means downloading the main backup file and then every single incremental backup. That’s painful even when doing a full restore, but horribly painful when trying to restore just a single file.

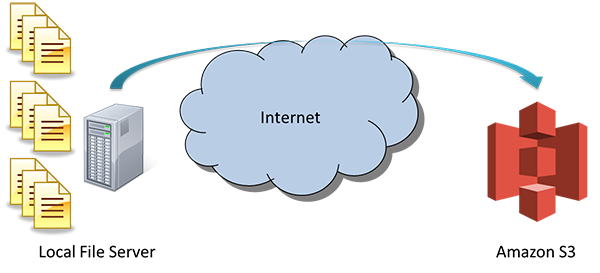

Not finding a tool that really met my needs, I decided to write my own. The result is a backup program I call Pug. Pug replicates new and changed files to the cloud as they are discovered and deletes files from the cloud when they are deleted from local storage. Importantly, the administrator controls how many versions of each file are maintained in cloud storage and how long deleted files are kept before they are removed. Thus, I can maintain a complete history of all files stored in local storage and retrieve any single one of them.

In practice, I do not keep all versions of all files. While Pug will allow that, I keep at most 14 revisions of files. And if a file is deleted, I will keep the deleted file around for 90 days. These are just my configured policies, but you get the idea. Pug takes care of everything automatically. The intent is to have a flexible solution that will work in many enterprise environments.
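To make that policy concrete, here is a minimal Python sketch of how such a retention rule could be expressed. It is not Pug's actual code: the `Revision` type, the `revisions_to_purge` function, and all parameter names are hypothetical, and only the two numbers (14 revisions, 90 days) come from my configuration described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Policy values taken from the post; everything else here is illustrative.
MAX_REVISIONS = 14
DELETED_RETENTION = timedelta(days=90)


@dataclass
class Revision:
    """One stored version of a file (hypothetical record, not Pug's schema)."""
    object_key: str
    uploaded_at: datetime


def revisions_to_purge(
    revisions: List[Revision],
    deleted_at: Optional[datetime],
    now: datetime,
    max_revisions: int = MAX_REVISIONS,
    deleted_retention: timedelta = DELETED_RETENTION,
) -> List[Revision]:
    """Return the revisions that the retention policy no longer requires."""
    newest_first = sorted(revisions, key=lambda r: r.uploaded_at, reverse=True)

    # A file deleted locally longer ago than the retention window
    # no longer needs any of its revisions.
    if deleted_at is not None and now - deleted_at > deleted_retention:
        return newest_first

    # Otherwise keep the newest `max_revisions` and purge the rest.
    return newest_first[max_revisions:]
```

A scheduled job could call a function like this for each tracked file and remove whatever it returns from cloud storage, which is the kind of hands-off behavior I wanted.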

Pug also meets my other requirements in that it never uploads the same file twice, regardless of whether there are 100 copies on the network. Before uploading to Amazon S3, it compresses files using gzip and then encrypts them using AES Crypt. This provides for bandwidth efficiency and security. (Note: do make sure you keep AES Crypt key files well protected, and do not store them in plain view on Amazon S3!)
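One common way to avoid uploading identical content twice is to key each stored object by a hash of the file's contents. The Python sketch below illustrates that idea together with the gzip step. It rests on several assumptions: this post does not say how Pug detects duplicates, so the use of SHA-256 is my illustration only; the `DedupStore` class is hypothetical; an in-memory dictionary stands in for the S3 bucket; and the AES Crypt encryption step is left out entirely.

```python
import gzip
import hashlib
from typing import Dict


def content_digest(data: bytes) -> str:
    """Identify file content by its SHA-256 digest (an assumed choice)."""
    return hashlib.sha256(data).hexdigest()


class DedupStore:
    """Toy stand-in for cloud storage that keeps one blob per unique content."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}  # digest -> gzip-compressed content
        self.paths: Dict[str, str] = {}    # local path -> digest

    def backup(self, path: str, data: bytes) -> bool:
        """Record `path`; return True only if new content had to be stored."""
        digest = content_digest(data)
        self.paths[path] = digest
        if digest in self.blobs:
            # Identical content is already stored, so nothing is uploaded.
            return False
        # Compress before "uploading"; encryption would follow here.
        self.blobs[digest] = gzip.compress(data)
        return True

    def restore(self, path: str) -> bytes:
        """Return the original content recorded for `path`."""
        return gzip.decompress(self.blobs[self.paths[path]])
```

With this layout, a hundred users holding the same file produce a hundred path entries but only one stored blob, and any of those paths can still be restored individually.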

I have been using Pug for a while and I’m quite pleased with the results. I no longer have to deal with routine backups to physical devices, the system automatically scans all locations (local file system files and files on NAS devices) looking for files to upload or delete, and, of course, the data is securely maintained off-site.